Members of Associate Professor Dong Wang's research group, the Social Sensing and Intelligence Lab, will present their research at The ACM Web Conference 2023, which will be held from April 30 to May 4 in Austin, Texas. The conference is the premier venue to present and discuss progress in research, development, standards, and applications of topics related to the Web.

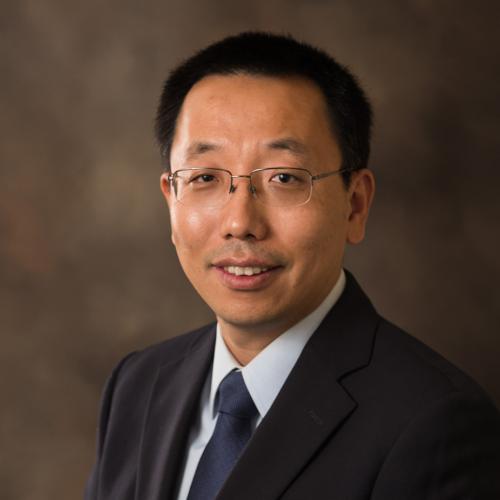

Postdoctoral researcher Yang Zhang will present his paper, "CollabEquality: A Crowd-AI Collaborative Learning Framework to Address Class-wise Inequality in Web-based Disaster Response." This work focuses on web-based disaster response (WebDR) applications, which aim to acquire real-time situation awareness of disaster events by collecting timely observations from the Web (e.g., social media). In this paper, the researchers addressed a limitation of current WebDR solutions, which often have imbalanced classification performance across different disaster situation awareness categories. To that end, they introduced a principled crowd-AI collaborative learning framework that identifies biased AI results and uses an estimation framework to model and address the class-wise inequality problem with crowd intelligence. In experiments on multiple real-world disaster applications, CollabEquality was shown to significantly reduce class-wise inequality while improving WebDR classification accuracy. The researchers believe the framework provides useful insights into addressing the class-wise inequality problem in many AI-driven classification applications, such as document classification, misinformation detection, and recommender systems.

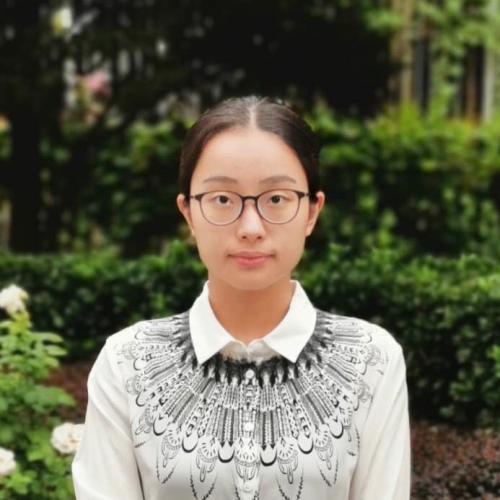

PhD student Ruohan Zong will present her paper, "ContrastFaux: Sparse Semi-supervised Fauxtography Detection on the Web using Multi-view Contrastive Learning." The work addresses the widespread misinformation on the Web, which has raised many concerns because of its far-reaching societal consequences. In this paper, the researchers studied an important type of online misinformation, namely fauxtography, where the image and associated text of a social media post jointly convey a questionable or false sense. They developed ContrastFaux, a multi-view contrastive learning framework that detects fauxtography by jointly extracting the shared fauxtography-related semantic information from the image and text of multi-modal posts. In experiments on multiple real-world datasets, the proposed framework was shown to accurately identify fauxtography on social media. The researchers believe that their work can also provide useful insights into using contrastive learning to learn shared semantic information in other social good applications where critical information is embedded in multiple data modalities, such as intelligent transportation and urban infrastructure monitoring.

The primary research focus of the Social Sensing and Intelligence Lab lies in the emerging areas of human-centered AI, AI for social good, and cyber-physical systems in social spaces. The lab develops interdisciplinary theories, techniques, and tools for fundamentally understanding, modeling, and evaluating human-centered computing and information (HCCI) systems, and for accurately reconstructing the correct "state of the world," both physical and social.